From passport issuing authorities and border protection to law enforcement, security officials around the world make identity judgements about members of the public based on photo-ID every day. Crucially, research tells us that this is a difficult and error-prone task [1-3]. In this article, we will detail some of the most up-to-date research on issues that are key to the use of photos as identification.

Improving photo-ID

When we use photo-ID, an image is compared to a live person or to a second image, and the observer decides whether or not these represent the same person. In the research literature, this is referred to as a face matching task. We know that it is difficult, if not impossible, to train people to improve on this task. In fact, UK police officers [4], Australian passport officers [5], and Australian grocery store cashiers [6] have been shown to be as error-prone as untrained students at face matching tasks. In the latter two studies mentioned, the researchers also recorded each passport officer and cashier’s years of experience in the job. Both studies found that there was no relationship between face matching accuracy and years of experience, meaning that people who had been in the job performing the task for a long time were no better than new recruits.

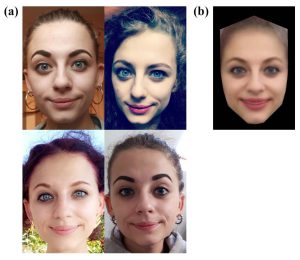

Rather than attempting to improve accuracy through training, recent research has turned to the notion of improving the photo-ID itself. One influential study suggested that using multiple images on a photo-ID document might improve accuracy on a face matching task [7], and this idea has also been reported in a previous edition of this journal [8]. The idea here is that multiple images show a selection of the different ways the person can look (in comparison with the current use of a single, passport-style image), and so can go some way to providing a comprehensive representation of that person to which a new image or a live person can be compared (see Figure 1). Importantly, the original work used only a computerised face matching task. More recent work, using a live person-to-photo task, has found no benefit of multiple images over a single image [9]. Another suggestion for improving photo-ID is to use a face average, combining multiple images of the same person using computer software [7-8], which should also theoretically produce a more representative image of the person (free from the idiosyncrasies of any single photograph; see Figure 1). It has been suggested that the brain might store familiar people as a face average [10-11] but this has been challenged by more recent research which shows that averages of familiar people are neither recognised more quickly nor rated as a better likeness than real images [12]. It is well established that face averages improve algorithm (i.e. machine-based) face recognition performance [13-15], however, two previous reports have shown that human observers do not benefit from the use of face averages [9, 15]. Therefore, despite promising preliminary data, the idea of updating photo-ID documents to include either multiple images or face averages is no longer supported by the most recent research.

Improving morph attack detection

A relatively new area for security concern with photo-ID use is the issue of ‘morph attacks’. This refers to a situation in which two people create a morph image, combining a photo of each of them to create a new image (see Figure 2). If this new morphed image looks sufficiently like the first person in the pair, they can apply for a new passport with this morphed image, obtaining a fraudulently obtained genuine (FOG) document. If the morphed image also looks sufficiently like the second person, then that person can subsequently pass through border control using the FOG passport. Two research articles [16-17], along with reports in this journal [18-19], have provided evidence that, although naïve observers frequently accept morphed images as genuine images, either alerting observers to this type of fraud or training them through a simple feedback task can dramatically improve morph detection rates. More recent research, however, suggests that these reports may lead Department of Defense officials to dramatically underestimate the threat posed by face morphing attacks [20]. In the original artifacts, the face morphs contained artefacts of the morphing process such as the ghost outline of hair or jawlines (see Figure 2, top row). We can be fairly certain, therefore, that training can improve detection rates for these ‘low quality’ (unedited) morphs.

With the advent of freely available image editing software, it is likely that fraudsters would utilise such tools to improve the quality of their morphs by removing artifacts created by the morphing process (see Figure 2, bottom row). Research using more sophisticated morphs, edited to remove obvious flaws in the images [20], has painted a more alarming picture than the original studies. Not only did training fail to improve morph detection rates, performance during the training session was at chance level (i.e., equivalent to guessing). In another experiment in the same study, judges compared morphed and genuine images to a live person standing in front of them. In this task, morphs were accepted as a genuine photo of the person on 49% of trials. Judges were also asked, “Do you have any reason why you wouldn’t accept this as an ID photo?” Of the 1,410 judges tested, only 18 gave reasons that specifically included mention of computer manipulation or similar, e.g., “doesn’t look real”, “looks filtered”, “looks photoshopped”. This presents a worrying picture for security services as there is no evidence to suggest that people can be trained to detect higher quality face morphs. There is, however, hope for the detection of face morphs, and this comes from computer algorithms. In a final study, a basic computer algorithm was able to detect the high quality morphed images significantly better than humans [20]. In an ideal scenario, the best way to tackle these types of morph attacks would be for government officials to directly acquire ID photos at the place of issue, preventing fraudsters from submitting pre-made morph images for consideration. However, this would require a wide-spread systematic change in the procedure for obtaining a passport. Therefore, our recommendation is that security officials do not focus on human morph detection since it is inevitable that morphs will continue to improve in quality and will soon be indistinguishable from genuine photographs. Instead, we champion the use and development of computer algorithms to help in the detection of this kind of fraud.

Making live identity judgements

The vast majority of work on face recognition has focused on testing people at computer screens, comparing one image to another. Although this reflects some aspects of the processing of photo-ID in the real world (e.g., a passport issuing officer comparing a new passport photo to previous images of the applicant), many judgements are made by comparing photo-ID to a live person (see Figure 3). As mentioned above, when a face morph is compared to a live person, the morph is accepted 49% of the time. This presents a dangerously high rate of morph acceptance in live settings.

People may believe that they would be more accurate in judging whether a photo shows the same person as the real person standing in front of them than they would be at judging whether two photos show the same person. In the eyewitness literature, this is referred to as the ‘live superiority hypothesis’ [21]. Research which has tested live face matching – comparing a person to a photo – has shown relatively low accuracy levels of 67% [6], 80% [9] and 83% [22]. As an illustration, Hartsfield-Jackson Atlanta International Airport sees more than 260,000 passengers daily, and so 20% errors would mean 52,000 people being erroneously questioned or being let through using someone else’s passport. These values are not any higher than those observed with computerized versions of face matching tasks, where the standard Glasgow Face Matching Test shows around 80% accuracy [23]. Therefore, evidence suggests there is no live superiority effect in face matching – people are just as error-prone when matching live faces to images as they are at matching two images. Photo-ID is typically used in a live setting, and so we recommend that future research should continue to reflect this by utilizing live tasks.

Humans vs Algorithms

It can be tempting to think of the human brain as a highly complex computer, particularly with the advent of computational neural networks which purport to mimic the brain. It is important, however, to acknowledge differences between human and computer face processing. Familiarity is not a topic covered in this article, but it is worth noting that humans are experts in recognizing familiar faces, and personal familiarity is very difficult to fully computationally model. While cutting edge algorithms (e.g., deep convolutional neural networks) are now performing at levels comparable with forensic facial examiners [24], we know that familiar human viewers remain superior in terms of accuracy.

As mentioned earlier in this article, where the latest research suggests that humans do not benefit from face averages (a computer-generated blend of multiple images of the same person) for recognition [9,15], computers do seem to show accuracy gains [13-15]. In fact, algorithms have been found on multiple occasions to produce 100% accuracy using face averages. In one study, algorithm recognition from single images was 54% but improved to 100% with face averages [13]. Another study showed a cell phone app’s face recognition increased from 86% with single images to 100% with face averages [15]. The same study showed in another experiment that a commercially available face recognition system (FaceVACS-DBScan 5.1.2.0. running Cognitec’s B10 algorithm [25]) was 100% accurate at searching for a target identity in a large database when the target image was an average of the identity [15].

As well as benefiting from averages where humans do not, algorithms can detect high quality face morphs where humans cannot [20]. Indeed, computers are well suited to picking up on imperceptible (at least, to humans) inconsistencies in images between, for example, reflections visible in the eyes and skin [26].

Facial recognition technology is increasingly used in the criminal justice system. Despite the growing appeal of facial recognition systems worldwide, there have been several high profile reports of their failure. A prominent civil liberties group, Big Brother Watch, conducted a series of freedom of information requests, finding that the Metropolitan Police’s use of facial recognition technology has misidentified people in 98% of cases of its use [27] (i.e., the system found “match” images which, in fact, showed a different person). This statistic is particularly important when considering the use of such technologies at events with high Black and minority ethnic populations, such as the well-publicised failure of the Metropolitan Police’s use of facial recognition technology at the Notting Hill Carnival, an event with a high proportion of British African Caribbean attendees. Of concern is the anecdotally-reported failure of facial recognition technologies with non-White faces [28]. This is arguably a more fundamental problem than the issue of civil liberties, which of itself led to San Francisco’s Board of Supervisors recently voting to ban facial recognition technology [29]. Biased algorithms are the product of biased humans – we are more accurate at recognizing people of our own race than other races, and an algorithm trained on White faces will not perform as accurately with non-White faces. It is, therefore, important that we strive to train algorithms on ethnically diverse image sets.

Conclusion

Looking to the future, it is important that we utilize the most up-to-date research and technology in our defense and security systems. It is also vitally important that the use of algorithms is not seen as a fool-proof tool for the task in hand, but that we bear in mind that the algorithm may be as biased as the humans who built it.

References

- Bruce, V., Henderson, Z., Newman, C., & Burton, A. M. (2001). Matching identities of familiar and unfamiliar faces caught on CCTV images. Journal of Experimental Psychology: Applied, 7(3), 207–218.

- Bruce, V., Henderson, Z., Greenwood, K., Hancock, P. J. B., Burton, A. M., & Miller, P. (1999). Verification of face identities from images captured on video. Journal of Experimental Psychology: Applied, 5(4), 339–360.

- Ritchie, K. L., Smith, F. G., Jenkins, R., Bindemann, M., White, D., & Burton, A. M. (2015). Viewers base estimates of face matching accuracy on their own familiarity: Explaining the photo-ID paradox. Cognition, 141, 161-169.

- Burton, A. M., Wilson, S., Cowan, M., & Bruce, V. Face recognition in poor-quality video: Evidence from security surveillance. Psychological Science, 10(3), 243-248.

- White, D., Kemp, R. I., Jenkins, R., Matheson, M., & Burton, A. M. (2014). Passport officers’ errors in face matching. PLoS ONE, 9, e103510.

- Kemp, R., Towell, N., & Pike, G. (1997). When seeing should not be believing: Photographs, credit cards and fraud. Applied Cognitive Psychology, 11, 211–222.

- White, D., Burton, A. M., Jenkins, R., & Kemp, R. (2014). Redesigning photo-ID to improve unfamiliar face matching performance. Journal of Experimental Psychology: Applied, 20, 166–173.

- Robertson, D. J., & Burton, A. M. (2016). Unfamiliar face recognition: Security, surveillance and smartphones. HDIAC Journal, 3(1), 15-21.

- Ritchie, K. L., Mireku, M. O., & Kramer, R. S. S. (2019). Face averages and multiple images in a live matching task. British Journal of Psychology. Advance online publication.

- Burton, A. M., Jenkins, R., Hancock, P. J. B., & White, D. (2005). Robust representations for face recognition: The power of averages. Cognitive Psychology, 51(3), 256–284.

- Robertson, D. J. (2015). Spotlight: Face recognition improves security. HDIAC

- Ritchie, K. L., Kramer, R. S. S., & Burton, A. M. (2018). What makes a face photo a ‘good likeness’? Cognition, 170, 1-8.

- Jenkins, R., & Burton, A. M. (2008). 100% accuracy in automatic face recognition. Science, 319, 435.

- Robertson, D. J., Kramer, R. S. S., & Burton, A. M. (2015). Face averages enhance user recognition for smartphone security. PLoS ONE, 10(3), e0119460.

- Ritchie, K. L., White, D., Kramer, R. S. S., Noyes, E., Jenkins, R., & Burton, A. M. (2018). Enhancing CCTV: Averages improve face identification from poor-quality images. Applied Cognitive Psychology, 32, 671-680.

- Robertson, D. J., Kramer, R. S. S., & Burton, A. M. (2017). Fraudulent ID using face morphs: Experiments on human and automatic recognition. PLoS ONE, 12(3), e0173319.

- Robertson, D. J., Mungall, A., Watson, D. G., Wade, K. A., Nightingale, S. J., & Butler, S. (2018). Detecting morphed passport photos: A training and individual differences approach. Cognitive Research: Principles and Implications, 3(27), 1-11.

- Robertson, D. J. (2018). Face recognition: Security contexts, super-recognizers, and sophisticated fraud. HDIAC Journal, 5(1), 7-10.

- Robertson, D. J. (2017). Spotlight: Face morphs – a new pathway to identity fraud. HDIAC

- Kramer, R. S. S., Mireku, M. O., Flack, T. R., & Ritchie, K. L. (2019). Face morphing attacks: Investigating detection with humans and computers. Cognitive Research: Principles and Implications, 4, 28.

- Fitzgerald, R. J., Price, H. L., & Valentine, T. (2018). Eyewitness identification: Live, photo, and video lineups. Psychology, Public Policy and Law, 24(3), 307–325.

- Megreya, A. M., & Burton, A. M. (2008). Matching faces to photographs: Poor performance in eyewitness memory (without the memory). Journal of Experimental Psychology: Applied, 14, 364–372.

- Burton, A. M., White, D., & McNeill, A. (2010). The Glasgow face matching test. Behavior Research Methods, 42(1), 286-291.

- Phillips, P. J., Yates, A. N., Hu, Y., Hahn, C. A., Noyes, E., Jackson, K., … O’Toole, A. J. (2018). Face recognition accuracy of forensic examiners, superrecognizers, and face recognition algorithms. Proceedings of the National Academy of Sciences, 115(24), 6171-6176.

- Cognitec FaceVACS DBScan. 2017. Available from: http://www.cognitec.com/facevacs-dbscan.html Accessed 1/8/2016

- Seibold, C., Hilsmann, A., & Eisert, P. (2018, July 5). Reflection analysis for face morphing attack detection. Retrieved from http://arxiv.org/pdf/1807.02030.pdf

- Face off: The lawless growth of facial recognition in UK policing. Big Brother Watch, May 2018. Available from https://bigbrotherwatch.org.uk/wp-content/uploads/2018/05/Face-Off-final-digital-1.pdf

- Facial recognition is accurate, if you’re a white guy. New York Times. Available from https://www.nytimes.com/2018/02/09/technology/facial-recognition-race-artificial-intelligence.html

- San Francisco bans facial recognition technology. New York Times. Available from https://www.nytimes.com/2019/05/14/us/facial-recognition-ban-san-francisco.html