The prospect of using artificial intelligence (AI) in warfare is complicated. Arguments exist both for and against the development of weaponized AI [1]. Although ideologies differ among the highest levels of government and throughout the global community [2–4], the very fact that this idea exists must give pause for further evaluation.

No matter the outcome, AI has already changed both defense and national security strategy. In a statement to the Senate Armed Services Committee in 2016, former Director of National Intelligence, James Clapper, eloquently and comprehensively summed up the situation by stating, “Implications of broader AI deployment include increased vulnerability to cyberattack, difficulty in ascertaining attribution, facilitation of advances in foreign weapon and intelligence systems, the risk of accidents and related liability issues, and unemployment. Although the United States leads AI research globally, foreign state research in AI is growing [5].”

The importance of AI to defense and security strategy influences how the U.S. government and military plan for the future. The January 2018 National Defense Strategy underlined the notion that the Department of Defense (DoD) should invest heavily in the military application of AI and autonomy, as they belong to “the very technologies that ensure we will be able to fight and win the wars of the future [6].”

Likewise, the December 2017 National Security Strategy acknowledged the risks that AI poses to the nation, while also reaffirming the U.S.’s commitment to investing in AI research [7].

Artificial Intelligence and Autonomous Systems in Defense Applications

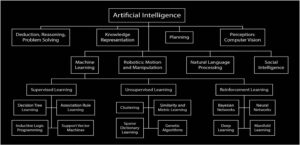

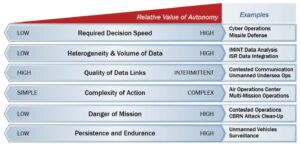

AI has no standardized definition, but it is generally understood as referring to any computerized system capable of exhibiting a level of rational behavior high enough to solve complex problems [8]. The concept grew out of work that defeated Germany’s “Enigma” code during World War II, primarily pushed by Alan Turing [9, 10]. The U.S. military has been highly engaged in AI research for nearly 70 years [11]. As a broad term, AI spans a wide range of approaches, including the branch of machine learning (ML), which encompasses deep learning (see Figure 1). AI also encompasses autonomous systems, as AI is the “intellectual foundation for autonomy [12].” It is difficult to discuss the weaponization of AI without discussing autonomous systems.

Figure 1: Approaches and disciplines in artificial intelligence and machine learning [61]

Integrating AI into a new paradigm of defense strategy is important for two reasons. First, the technology is available, so it makes sense for the U.S. to pursue technological superiority over its adversaries in this realm. Second, doing so is necessary in order to counter the advances in (and emerging proficiencies of) new technologies developed by Russia and China.

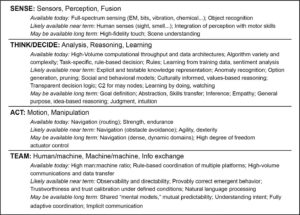

Table 1: Projected capabilities for autonomous systems [12]

Artificial Intelligence as a Weapon of Mass Destruction

Existing applications of AI to Weapons of Mass Destruction (WMDs) are extremely limited. Thus, developments within the technology have to be extrapolated to determine how they may be used in WMDs. However, due to AI’s unpredictable nature and its potential use in fully- and semi-autonomous weapons systems, scenarios may exist where AI changes the game, so to speak: situations not typically thought of as WMD-relevant could become such. The use of autonomy in weapon systems has grown swiftly over the past 50 years. Few of these systems are fully autonomous and able to make decisions on their own by using AI, but that is the likely next step, as evidenced by the intentions of many nation-states.

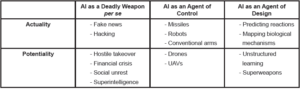

Existing autonomous platforms merely set the stage for what is to come in terms of weapons with artificially intelligent capabilities. The use of AI in WMDs, while complex, can be simplified into a matrix (see Table 2) that illustrates two characteristics: (1) mode, which illustrates the way that AI could be used for the purposes of mass destruction, including as a weapon in itself, as an agent to control other weapons platforms, and as an agent used to design WMDs; and (2) temporality, demonstrated by how AI is currently being used in WMDs or advanced weaponry (actuality) versus emerging developments in AI technology that could be used in WMDs or as WMDs (potentiality). The mode of AI is more complicated than its temporality. In this sense, there are three possibilities.

As a Deadly Weapon per se

AI itself could be the weapon (deadly weapon per se), as in a program that finds the best route for hacking into a system [17] or one that spreads falsehoods on social media by imitating human actors [18]. Fears of AI being used to support WMDs can be traced back to at least 1987, when Yazdani and Whitby argued that AI at the time was increasing “the possibility of an accidental nuclear war [19].” These fears carry over to the present, and are possibly even more justified as AI technology flourishes. Roman Yampolskiy, an associate professor of computer engineering at the University of Louisville, contended in January 2017 that “weaponized AI is a weapon of mass destruction and an AI Arms Race is likely to lead to an existential catastrophe for humanity [20].” A survey conducted in August 2017 at the Black Hat USA Conference found that 62 percent of survey participants (briefly described as “the best minds in cybersecurity”) believed AI would be used as a weapon within the upcoming year [21].

AI-based systems are becoming more common and more intelligent. These programs have already beaten expert human players at games such as chess [22] and Go [23], and continue to surprise humans with their capacity. For example, chatbots created by Facebook were able to communicate with a language they developed [24, 25], and Google developed neural networks that designed an unbreakable encryption method to keep their conversations secret [26]. However, a darker side to AI programming exists as well, demonstrated by surprising, off-color remarks from Microsoft chatbots Tay and Zo, including being manipulated into defending controversial ideologies as well as making unprompted comments that could be seen as hate speech, respectively [27, 28]. Moving forward, the fear is that AI could lead to a social crisis of epic proportions by creating massive unemployment and a financial catastrophe [29], or even the onset of the technological singularity, the point at which machine intelligence exceeds human intelligence [30]. Although these scenarios may not seem like the results of a conventional WMD, they could usher in a great unknown with potentially life-changing circumstances.

As an Agent of Control

The second mode (agent of control) is much easier to conceptualize, in that AI is used to control an object, such as a missile or robot. This has been done for decades, and continues with systems such as the P-800 Oniks missile (SS-N-26 Strobile) [31]; missiles proposed for the planned Tupolev PAK DA bomber [32]; the Long-range Anti-Ship Missile (LRASM) [33]; and the Epsilon Launch Vehicle [34]. Each of these rocket/propulsion systems is capable of providing foundational technology for more advanced usage of AI in larger WMD systems. Most likely, the integration of AI into the guidance and control systems of these types of platforms will continue.

Figure 2: Autonomy derives operational value across a diverse array of vital DoD missions [12]

There are implications that I call the “terminator conundrum.” What happens when that thing can inflict mortal harm and is empowered by artificial intelligence. How are we going to deal with that? How are we going to know what’s in the vehicle’s mind, presuming for the moment we are capable of creating a vehicle with a mind. It’s not just a programmed thing that drives a course or stays on the road or keeps you between the white lines and the yellow lines, doesn’t let you cross into oncoming traffic, but can actually inflict lethal damage to an enemy and has an intelligence of its own. How do we document that? How do we understand it? How do we know with certainty what it’s going to do? [35].

General Selva’s “terminator conundrum” has been echoed by the Department of Homeland Security (DHS). A 2017 report on AI stated, “Robots could kill mankind, and it is naive not to take the threat seriously [29].” This fear is becoming reality. For example, when asked if she wanted to destroy humans, Hanson Robotics’ Sophia responded, “OK. I will destroy humans [36].” However, the future form of robots as WMDs may be heading in the direction of the Russian-created Final Experimental Demonstration Object Research, which is capable of replicating complex human movements including firing weapons [37]. The growth of autonomous vehicles for defense applications (especially UAVs and unmanned ground vehicles) will continue, and fundamental questions regarding robotic operations, especially in combat, must be answered [38]. Whether these could be or would be considered WMDs is open for debate.

As an Agent of Design

Finally, the third mode of AI is its use in design. AI techniques could be used to develop weapons, some of which may not be designs previously conceived by human beings. In other words, AI could use its unique perspectives, as demonstrated by behavior from the Google neural network and Facebook chatbots, to create weapon designs that humans may not have otherwise conceptualized. Little currently exists in the field of AI as it applies to the design of WMDs, but lessons from other areas may provide some insight into how this could be possible. Predicting the outcomes of chemical reactions (particularly in organic chemistry) can be quite challenging. However, in 2017, several researchers showed how AI can be used to predict the outcomes of complex reactions with greater accuracy than other methods [39, 40].

Using AI to maximize the products of a reaction could create chemical superweapons with massive yields. Research partially funded by the U.S. Army Medical Research and Materiel Command reverse-engineered the complex mechanism of regeneration in planarians by searching the genome with AI [41]. While the intent of the work was focused on understanding cellular and tissue regeneration for medical applications, this method could be used to reverse-engineer the most lethal characteristics of an organism and design a super bioweapon focused on enhancing this trait.

Most AI relies on supervised learning as the backbone of how it performs tasks, meaning the AI sorts images and patterns into categories that have been defined by humans and programmed into the AI. For example, researchers may feed millions of images of trees into an artificial neural network (ANN), an artificial construct that mimics the functions of neurons in a human brain, so that the program can learn what a tree looks like. Based on these images, researchers may also program tree categories (e.g., pine, maple) so that the AI, by way of the ANN, can categorize images of trees based on type.
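The labeling workflow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a description of any system cited in this article: it swaps the neural network for a much simpler nearest-centroid classifier, and the two-number feature vectors (leaf length and width in centimeters), the sample values, and the function names `train_centroids` and `classify` are all hypothetical.

```python
from collections import defaultdict
from math import dist


def train_centroids(examples):
    """Average the feature vectors that humans filed under each label."""
    grouped = defaultdict(list)
    for features, label in examples:
        grouped[label].append(features)
    return {
        label: tuple(sum(column) / len(rows) for column in zip(*rows))
        for label, rows in grouped.items()
    }


def classify(centroids, features):
    """Return the human-defined label whose centroid is closest."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical training data: (leaf length cm, leaf width cm) plus a human label.
labeled_trees = [
    ((8.0, 0.2), "pine"),
    ((10.0, 0.1), "pine"),
    ((9.0, 0.3), "pine"),
    ((9.0, 10.0), "maple"),
    ((11.0, 12.0), "maple"),
    ((10.0, 11.0), "maple"),
]

centroids = train_centroids(labeled_trees)
print(classify(centroids, (9.5, 0.2)))    # a long, thin needle
print(classify(centroids, (10.5, 10.0)))  # a broad leaf
```

The point of the sketch is that the program can only ever answer with a category a person supplied in advance; it has no way to propose a third kind of tree.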

Table 2: Classification of AI as WMDs

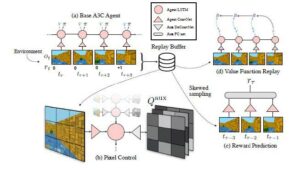

The opposite of this is unsupervised learning, in which the AI has no pre-programmed categories, but rather is allowed to design its own. The machine would sort patterns or images into what it thinks would be logical categories (which may not overlap with human-generated categories). In 2015, researchers at Google fed white noise into an ANN, and let the AI use what it learned from the millions of images it had in its database. Through a process that Google has nicknamed “inceptionism,” the ANN creates bizarre yet beautiful images [42]. Google has also developed the UNsupervised REinforcement and Auxiliary Learning (UNREAL) agent (see Figure 3), which has improved the speed of learning certain applications by a factor of 10.
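A minimal sketch of unsupervised grouping follows, assuming plain k-means clustering as a stand-in for the far more elaborate systems cited in this section. The leaf measurements are hypothetical and carry no labels; the program is told only how many groups to form, and the function name `k_means` and the choice to seed with the first k points are illustrative assumptions.

```python
from math import dist


def k_means(points, k, iterations=10):
    """Group unlabeled points into k clusters (plain Lloyd's algorithm)."""
    # Assumption: seed with the first k points so the sketch is deterministic.
    centroids = [points[i] for i in range(k)]
    clusters = [[] for _ in range(k)]
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        clusters = [[] for _ in range(k)]
        for point in points:
            nearest = min(range(k), key=lambda i: dist(point, centroids[i]))
            clusters[nearest].append(point)
        # Update step: move each centroid to the mean of its cluster,
        # keeping the old centroid if a cluster ends up empty.
        centroids = [
            tuple(sum(column) / len(cluster) for column in zip(*cluster))
            if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return clusters, centroids


# Hypothetical measurements (leaf length cm, leaf width cm); no labels given.
unlabeled = [
    (8.0, 0.2), (9.0, 10.0), (10.0, 0.1),
    (11.0, 12.0), (9.0, 0.3), (10.0, 11.0),
]

clusters, centroids = k_means(unlabeled, k=2)
for index, members in enumerate(clusters):
    print(index, sorted(members))
```

The groups come back numbered rather than named: the algorithm separates thin leaves from broad ones on its own, but deciding what each group means is left to a human reader.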

To date, AI has been used in weapon systems or to understand relatively common aspects of chemistry and biology. However, what if AI creates something that never existed before? What if it generates a new class of weapon never imagined by human beings? Unsupervised learning used in this capacity has the potential to do just that.

Counterbalance

The difficulty in assessing how AI could be a WMD threat is a product of the fact that so little information exists regarding this concept. Most likely, evaluating AI uses in conventional weaponry would be limiting. However, as stated by DHS, “Humans must maintain control over machines [29].” Arguably, the two greatest fears (and threats) of AI are its use in autonomous weapon systems and the loss of human control. A number of actions have been taken with regard to these threats. Three categories describe the level of autonomy a system can have:

- Human-in-the-Loop Weapons – Robots that can select targets and deliver force only with a human command;

- Human-on-the-Loop Weapons – Robots that can select targets and deliver force under the oversight of a human operator who can override the robots’ actions; and

- Human-out-of-the-Loop Weapons – Robots that are capable of selecting targets and delivering force without any human input or interaction [43].

The DoD adheres to the human-in-the-loop principle, as evidenced by DoD Directive 3000.09 [44]. In fact, the U.S. is the first country to proclaim a formal policy on autonomous weapons systems [45]. In addition, “DoD personnel must comply with the law of war, including when using autonomous or unmanned weapon systems” as directed by the DoD Law of War Program (DoD Directive 2311.01E) [46, 47].

The challenge, however, lies in the fact that although the U.S. and its allies adhere to developing human-in-the-loop systems (or even human-on-the-loop systems), other countries may not, and probably will not. Former Deputy Secretary Bob Work acknowledged this challenge in 2015 when he stated, “Now, we believe, strongly, that humans should be the only ones to decide when to use lethal force. But when you’re under attack, especially at machine speeds, we want to have a machine that can protect us [48].” Ironically, AI may be the greatest counter to AI in some cases, at least in principle. AI may also be the best counter to non-AI WMDs. Programs such as Synchronized Net-Enabled Multi-INT Exploitation have enabled DoD to more efficiently exploit an adversary’s weakness [49]. Researchers at the University of Missouri have shown that a deep-learning program could reduce the amount of time needed to identify surface-to-air missile sites from 60 hours to 42 minutes [50].

Unfortunately, this interplay has possibly already created an arms race, and as Edward Geist, a MacArthur Nuclear Security Fellow at Stanford University, argues, the only thing that can be done now is to manage it [51]. Significant efforts in the global community have sought to understand the implications of AI both for its use in WMD development and as a WMD counterbalance. The United Nations Interregional Crime and Justice Research Institute (UNICRI) has led many of the efforts in this area, beginning with the announcement of its programme on AI and Robotics in 2015, and the launch of the Centre on Artificial Intelligence and Robotics in 2016 [52].

Figure 3: Overview of the UNREAL agent [62]

The potential uses of AI as, or in, a WMD are still very much up for debate. Even so, many in the scientific and even political communities have taken action against the weaponization of AI in general. More than 20,000 prominent figures across the world have called for the exercise of caution when it comes to developing AI for non-beneficial uses [55]. Additionally, a group of AI researchers and ethicists have developed a list of 23 principles—mirroring the Asilomar Principles developed in response to the use of recombinant DNA—intended to drive the responsible innovation and development of AI [56]. Google’s DeepMind has supported the development of an AI “kill switch” that could, as a last resort, shut down a system if control were lost [57]. The future of AI is uncertain, especially as it applies to WMDs, but one thing is certain: there has been no lack of concern regarding what a future with weaponized AI could look like [58, 59].

Conclusion

Near- and long-term applications of AI in WMD development are uncertain, but the technology exists and improves every day. With regard to AI as a WMD, and to weaponized AI in general, the greater concern is the uncertainty surrounding how AI will be used in WMD development rather than the potential for what it could create. Some regard AI’s military applications as potentially more transformative than the advent of nuclear weapons [60]. Although DoD has taken measures to ensure that humans remain in ultimate control of machines, it is not known how long this may last. For now at least, two things are clear. First, AI research in general should proceed with caution and follow the principles of responsible innovation. Second, government and military officials must be prepared to face the certainty of AI in an uncertain future.

References

1. Roff, H. M., & Moyes, R. (2016). Meaningful human control, artificial intelligence and autonomous weapons. Briefing paper prepared for the Informal Meeting of Experts on Lethal Autonomous Weapons Systems, UN Convention on Certain Conventional Weapons, Geneva. Retrieved from http://www.article36.org/wp-content/uploads/2016/04/MHC-AI-and-AWS-FINAL.pdf

2. Kerr, I., Bengio, Y., Hinton, G., Sutton, R., & Precup, D. (n.d.). RE: AN INTERNATIONAL BAN ON THE WEAPONIZATION OF AI [Letter written November 2, 2017 to The Right Honourable Justin Trudeau, P.C., M.P.]. Retrieved from https://techlaw.uottawa.ca/bankillerai#letter

3. Frew, W. (2017, November 7). Killer robots: Australia’s AI leaders urge PM to support a ban on lethal autonomous weapons. Retrieved from https://newsroom.unsw.edu.au/news/science-tech/killer-robots-australia%E2%80%99s-ai-leaders-urge-pm-support-ban-lethal-autonomous-weapons

4. Future of Life Institute. (n.d.). An open letter to the United Nations Convention on Certain Conventional Weapons. Retrieved from https://futureoflife.org/autonomous-weapons-open-letter-2017/

5. Clapper, J. R. (2016). Statement for the record: Worldwide threat assessment of the U.S. Intelligence Community. Office of the Director of National Intelligence. Retrieved from https://www.dni.gov/files/documents/SASC_Unclassified_2016_ATA_SFR_FINAL.pdf

6. U.S. Department of Defense. (2018). Summary of the National Defense Strategy of the United States of America. Retrieved from https://www.defense.gov/Portals/1/Documents/pubs/2018-National-Defense-Strategy-Summary.pdf

7. The White House. (2017). National Security Strategy of the United States of America. Retrieved from https://www.whitehouse.gov/wp-content/uploads/2017/12/NSS-Final-12-18-2017-0905.pdf

8. Executive Office of the President, National Science and Technology Council Committee on Technology. (2016). Preparing for the Future of Artificial Intelligence (Rep.). Washington, DC. Retrieved from https://obamawhitehouse.archives.gov/sites/default/files/whitehouse_files/microsites/ostp/NSTC/preparing_for_the_future_of_ai.pdf

9. Turing, A. M. (1950). Computing machinery and intelligence. Mind, 59(236), 433–460. Retrieved from http://www.jstor.org/stable/2251299

10. Rejewski, M. (1981). How Polish mathematicians deciphered the Enigma. IEEE Annals of the History of Computing, 3(3), 213-234. Retrieved from http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.692.9386&rep=rep1&type=pdf

11. National Research Council. (1999). Funding a revolution: Government support for computing research. Washington, DC: The National Academies Press. Retrieved from https://doi.org/10.17226/6323

12. Defense Science Board. (2016, June). Defense Science Board summer study on autonomy. Washington, DC. Retrieved from https://www.hsdl.org/?abstract&did=794641

13. Office of the Under Secretary of Defense (Comptroller)/Chief Financial Officer. (2017). Program acquisition cost by weapon system: United States Department of Defense Fiscal Year 2018 budget request (Rep.). Retrieved from http://comptroller.defense.gov/Portals/45/Documents/defbudget/fy2018/fy2018_Weapons.pdf

14. U.S. Department of Defense, Defense Science Board. (2012). Task force report: The role of autonomy in DoD systems (Rep.). Retrieved from https://fas.org/irp/agency/dod/dsb/autonomy.pdf

15. Arkin, R. (2013). Lethal autonomous systems and the plight of the non-combatant. AISB Quarterly, 137, 4-12. Retrieved from http://www.aisb.org.uk/publications/aisbq/AISBQ137.pdf

16. Webster, G., Creemers, R., Triolo, P., & Kania, E. (2017). China’s plan to ‘lead’ in AI: Purpose, prospects, and problems [Web log post]. Retrieved from https://www.newamerica.org/cybersecurity-initiative/blog/chinas-plan-lead-ai-purpose-prospects-and-problems/

17. Metz, C. (2016, August 5). Hackers don’t have to be human anymore: This bot battle proves it. Wired. Retrieved from https://www.wired.com/2016/08/security-bots-show-hacking-isnt-just-humans/

18. Chessen, M. (2017). The MADCOM Future: How artificial intelligence will enhance computational propaganda, reprogram human culture, and threaten democracy…and what can be done about it (Rep.). Washington, DC: Atlantic Council. Retrieved from http://www.atlanticcouncil.org/publications/reports/the-madcom-future

19. Yazdani, M., & Whitby, B. (1987). Accidental nuclear war: The contribution of artificial intelligence. Artificial Intelligence Review, 1(3), 221-227. doi:10.1007/bf00142294

20. Conn, A. (2017, January 18). Roman Yampolskiy interview. Future of Life Institute. Retrieved from https://futureoflife.org/2017/01/18/roman-yampolskiy-interview/

21. Cylance. (2017, August 1). Black Hat attendees see AI as double-edged sword. Retrieved from https://www.cylance.com/en_us/blog/black-hat-attendees-see-ai-as-double-edged-sword.html

22. Krauthammer, C. (1997, May 26). Be afraid: The meaning of Deep Blue’s victory. The Weekly Standard. Retrieved from http://www.weeklystandard.com/be-afraid/article/9802

23. Etherington, D. (2017, May 23). Google’s AlphaGo AI beats the world’s best human Go player. Retrieved from https://techcrunch.com/2017/05/23/googles-alphago-ai-beats-the-worlds-best-human-go-player/

24. Simonite, T. (2017, August 1). No, Facebook’s chatbots will not take over the world. Wired. Retrieved from https://www.wired.com/story/facebooks-chatbots-will-not-take-over-the-world/

25. Lewis, M., Yarats, D., Dauphin, Y., Parikh, D., & Batra, D. (2017). Deal or no deal? End-to-end learning of negotiation dialogues. Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing. doi:10.18653/v1/d17-1259

26. Abadi, M. & Andersen, D. (2016). Learning to protect communications with adversarial neural cryptography. Retrieved from https://arxiv.org/abs/1610.06918

27. Lee, P. (2016, March 25). Learning from Tay’s introduction [Web log post]. Retrieved from https://blogs.microsoft.com/blog/2016/03/25/learning-tays-introduction/

28. Price, R. (2017, July 24). Microsoft’s AI chatbot says Windows is ‘spyware’. Retrieved from http://www.businessinsider.com/microsoft-ai-chatbot-zo-windows-spyware-tay-2017-7

29. U.S. Department of Homeland Security. (2017). Narrative analysis: Artificial intelligence (Rep.). Retrieved from https://info.publicintelligence.net/OCIA-ArtificialIntelligence.pdf

30. Shanahan, M. (2015). The technological singularity. Cambridge, MA: The MIT Press. Retrieved from https://mitpress.mit.edu/books/technological-singularity

31. Litovkin, N., & Litovkin, D. (2017, May 31). Russia’s digital doomsday weapons: Robots prepare for war. Retrieved from https://www.rbth.com/defence/2017/05/31/russias-digital-weapons-robots-and-artificial-intelligence-prepare-for-wa_773677

32. Wood, L. T. (2017, July 21). Russia to develop missile featuring artificial intelligence. The Washington Times. Retrieved from http://www.washingtontimes.com/news/2017/jul/21/russia-develop-missile-featuring-artificial-intell/

33. Mizokami, K. (2016, February 25). The Navy’s new AI missile sinks ships the smart way. Popular Mechanics. Retrieved from http://www.popularmechanics.com/military/weapons/a19624/the-navys-new-missile-sinks-ships-the-smart-way/

34. Japan Aerospace Exploration Agency. (n.d.). Epsilon Launch Vehicle. Retrieved from http://global.jaxa.jp/projects/rockets/epsilon/

35. The Brookings Institution. (2016, January 11). Trends in military technology and the future force. Retrieved from https://www.brookings.edu/events/trends-in-military-technology-and-the-future-force/

36. Edwards, J. (2017, November 8). I interviewed Sophia, the artificially intelligent robot that said it wanted to “destroy humans.” Retrieved from http://www.businessinsider.com/interview-with-sophia-ai-robot-hanson-said-it-would-destroy-humans-2017-11

37. O’Connor, T. (2017, April 19). Russia built a robot that can shoot guns and travel to space. Newsweek. Retrieved from http://www.newsweek.com/russia-built-robot-can-shoot-guns-and-travel-space-586544

38. Metz, S. (2014). Strategic insights: The land power robot revolution is coming. Retrieved from http://ssi.armywarcollege.edu/index.cfm/articles/Landpower-Robot-Revolution/2014/12/10

39. Coley, C. W., Barzilay, R., Jaakkola, T. S., Green, W. H., & Jensen, K. F. (2017). Prediction of organic reaction outcomes using machine learning. ACS Central Science, 3(5), 434–443. doi:10.1021/acscentsci.7b00064

40. Skoraczyński, G., Dittwald, P., Miasojedow, B., Szymkuć, S., Gajewska, E. P., Grzybowski, B. A., & Gambin, A. (2017). Predicting the outcomes of organic reactions via machine learning: Are current descriptors sufficient? Scientific Reports, 7(1). doi:10.1038/s41598-017-02303-0

41. Lobo, D., & Levin, M. (2015). Inferring regulatory networks from experimental morphological phenotypes: A computational method reverse-engineers planarian regeneration. PLOS Computational Biology, 11(6). doi:10.1371/journal.pcbi.1004295

42. Szegedy, C., Liu, W., Jia, Y., Sermanet, P., Reed, S., Anguelov, D., . . . Rabinovich, A. (2015). Going deeper with convolutions. In 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). doi:10.1109/cvpr.2015.7298594

43. Human Rights Watch. (2015, April 9). Mind the gap: The lack of accountability for killer robots. Retrieved from https://www.hrw.org/report/2015/04/09/mind-gap/lack-accountability-killer-robots

44. U.S. Department of Defense. (2017). DoD Directive 3000.09. Retrieved from http://www.esd.whs.mil/Portals/54/Documents/DD/issuances/dodd/300009p.pdf

45. Gubrud, M. (2012, November 27). DoD Directive on autonomy in weapon systems. Retrieved from https://www.icrac.net/dod-directive-on-autonomy-in-weapon-systems/

46. U.S. Department of Defense. (2011). DoD Directive 2311.01E. Retrieved from http://www.esd.whs.mil/Portals/54/Documents/DD/issuances/dodd/231101e.pdf

47. U.S. Department of Defense. (2011). Unmanned Systems Integrated Roadmap FY2011-2036 (Rep. No. 11-S-3613). Retrieved from https://fas.org/irp/program/collect/usroadmap2011.pdf

48. Work, B. (2015, December 14). CNAS Defense Forum [Speech]. U.S. Department of Defense. Retrieved from https://www.defense.gov/News/Speeches/Speech-View/Article/634214/cnas-defense-forum/

49. Freedberg, S. J. (2017, July 13). Artificial intelligence will help hunt Daesh by December. Breaking Defense. Retrieved from http://breakingdefense.com/2017/07/artificial-intelligence-will-help-hunt-daesh-by-december/

50. Marcum, R. A., Davis, C. H., Scott, G. J., & Nivin, T. W. (2017). Rapid broad area search and detection of Chinese surface-to-air missile sites using deep convolutional neural networks. Journal of Applied Remote Sensing, 11(04), 1. doi:10.1117/1.jrs.11.042614

51. Geist, E. M. (2016). It’s already too late to stop the AI arms race—We must manage it instead. Bulletin of the Atomic Scientists, 72(5), 318-321. doi:10.1080/00963402.2016.1216672

52. United Nations Interregional Crime and Justice Research Institute. (n.d.). UNICRI Centre for Artificial Intelligence and Robotics. Retrieved from http://www.unicri.it/in_focus/on/UNICRI_Centre_Artificial_Robotics

53. Nichols, G., Haupt, S., Gagne, D., Rucci, A., Deshpande, G., Lanka, P., & Youngblood, S. (2018). State of the Art Report: Artificial intelligence and machine learning for defense applications (Rep.). Homeland Defense and Security Information Analysis Center. Manuscript in preparation.

54. Warnke, P. (2017, October 6). The effect of new technologies on nuclear non-proliferation and disarmament: Artificial intelligence, hypersonic technology and outer space. United Nations Office for Disarmament Affairs. Retrieved from https://www.un.org/disarmament/update/the-effect-of-new-technologies-on-nuclear-non-proliferation-and-disarmament-artificial-intelligence-hypersonic-technology-and-outer-space/

55. Future of Life Institute. (n.d.). The 24189 Open Letter Signatories Include. Retrieved from https://futureoflife.org/awos-signatories/

56. Future of Life Institute. (n.d.). Asilomar AI Principles. Retrieved from https://futureoflife.org/ai-principles/

57. Orseau, L., & Armstrong, S. (2016). Safely interruptible agents. In Uncertainty In Artificial Intelligence: Proceedings of the Thirty-Second Conference (2016) (pp. 557). Jersey City, NJ: Conference on Uncertainty in Artificial Intelligence. Retrieved from http://www.auai.org/uai2016/proceedings/papers/68.pdf

58. Johnson, B. D., Vanatta, N., Draudt, A., & West, J. R. (2017). The new dogs of war: The future of weaponized artificial intelligence (Rep.). West Point, NY: Army Cyber Institute. Retrieved from http://www.dtic.mil/docs/citations/AD1040008

59. Brundage, M., Avin, S., Clark, J., Toner, H., Eckersley, P., Garfinkel, B., . . . Amodei, D. (2018). The malicious use of artificial intelligence: Forecasting, prevention, and mitigation (Rep.). Retrieved from https://img1.wsimg.com/blobby/go/3d82daa4-97fe-4096-9c6b-376b92c619de/downloads/1c6q2kc4v_50335.pdf

60. Allen, G., & Chan, T. (2017). Artificial intelligence and national security (Rep.). Cambridge, MA: Belfer Center for Science and International Affairs, Harvard Kennedy School. Retrieved from https://www.belfercenter.org/sites/default/files/files/publication/AI%20NatSec%20-%20final.pdf

61. De Spiegeleire, S., Maas, M., & Sweijs, T. (2017). Artificial Intelligence and the Future of Defense: Strategic implications for small- and medium-sized force providers (Rep.). The Netherlands: The Hague Centre for Strategic Studies. Retrieved from https://hcss.nl/report/artificial-intelligence-and-future-defense

62. Jaderberg, M., Mnih, V., Czarnecki, W. M., Schaul, T., Leibo, J. Z., Silver, D., & Kavukcuoglu, K. (2016). Reinforcement learning with unsupervised auxiliary tasks. Retrieved from https://arxiv.org/abs/1611.05397